In-the-moment Customer Sentiment

Amazon Alexa

Overview

Created a long-term vision for collecting in-the-moment customer sentiment and satisfaction

My Role

UX Designer Intern

Timeline

May - August 2023

Team

Alexa Proactive Experiences (APEX): design manager, designer, researcher, product manager

Skills / Tools

Figma, Principle, Product Thinking, Multimodal Design, Voice Interaction Design, Conversation Design, Visual Design

⟡ Overview

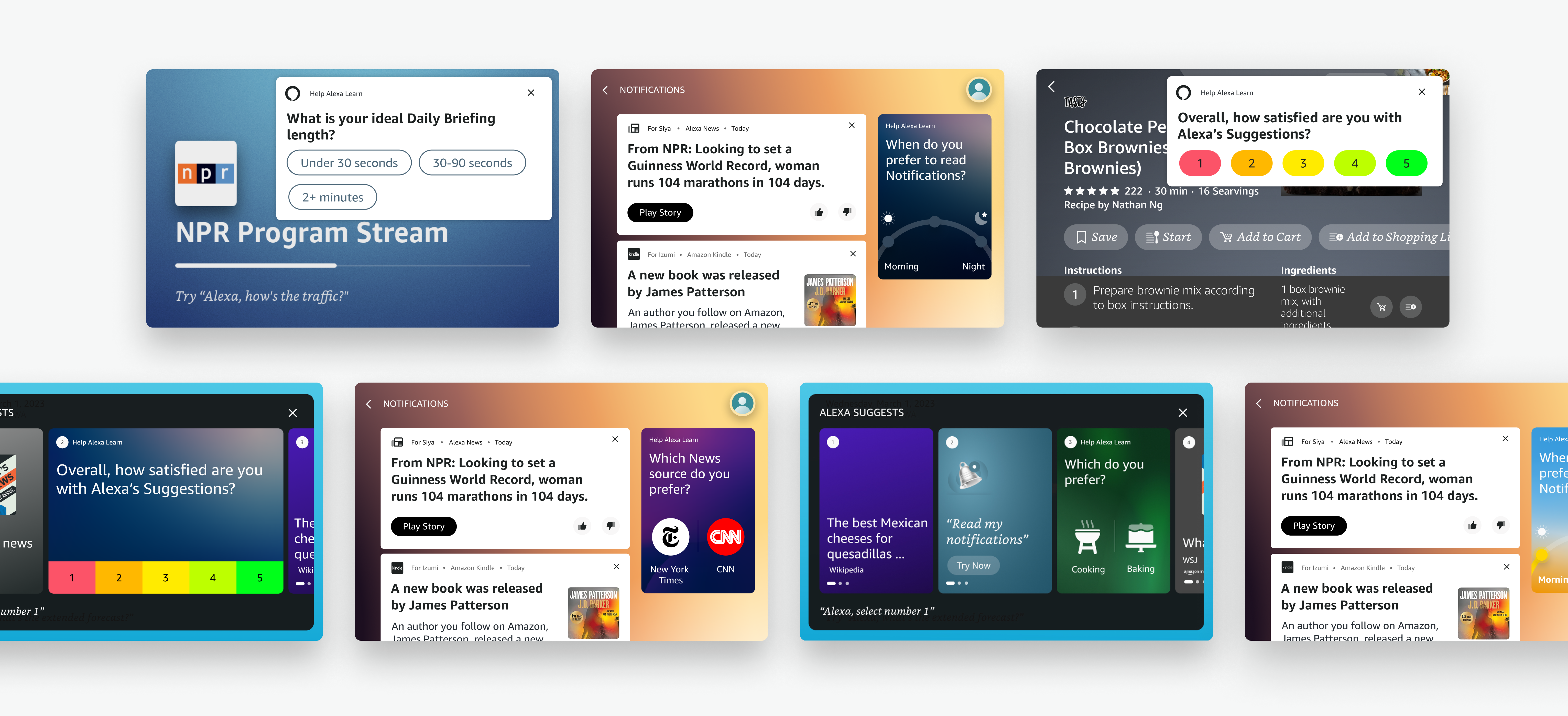

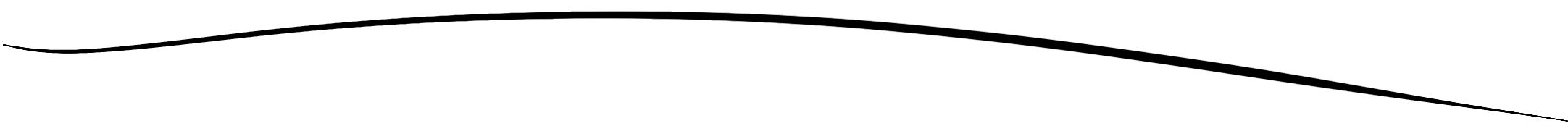

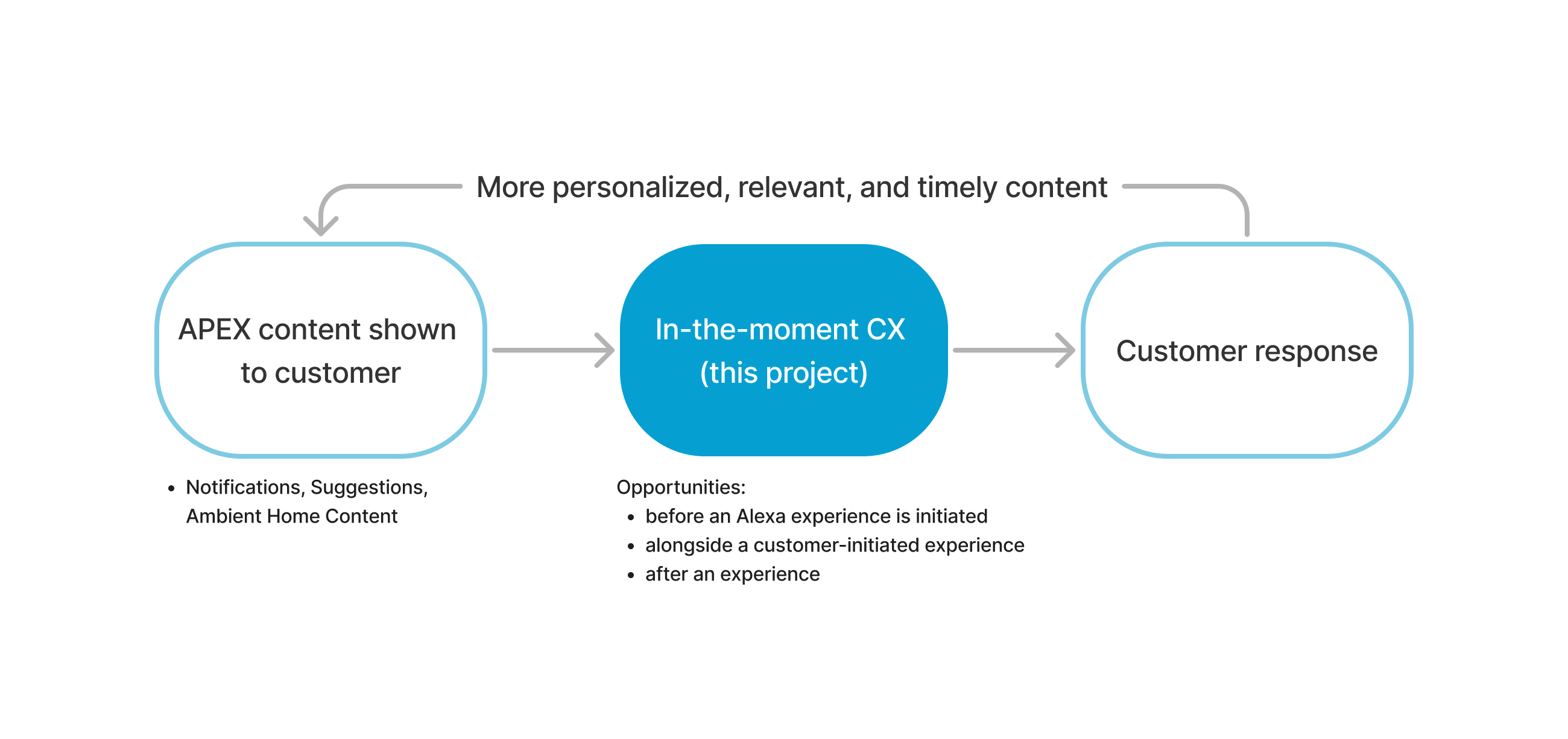

I was a UX Design intern on the Alexa Proactive Experiences (APEX) team. As a horizontal team, APEX integrates content from domain partners (e.g., News, Music, and Recipes) to create personalized moments of engagement via notifications, suggestions, content optimization, and feedback across voice and graphical user interfaces.

Over 12 weeks, I created a multimodal CX framework for collecting in-the-moment customer sentiment and satisfaction, presented my proposal to a cross-functional group of stakeholders, and achieved alignment.

Prototype of the card CX pattern with a time-of-day question on an Echo Show device

⟡ Problem

Beyond 'Alexa shut up'

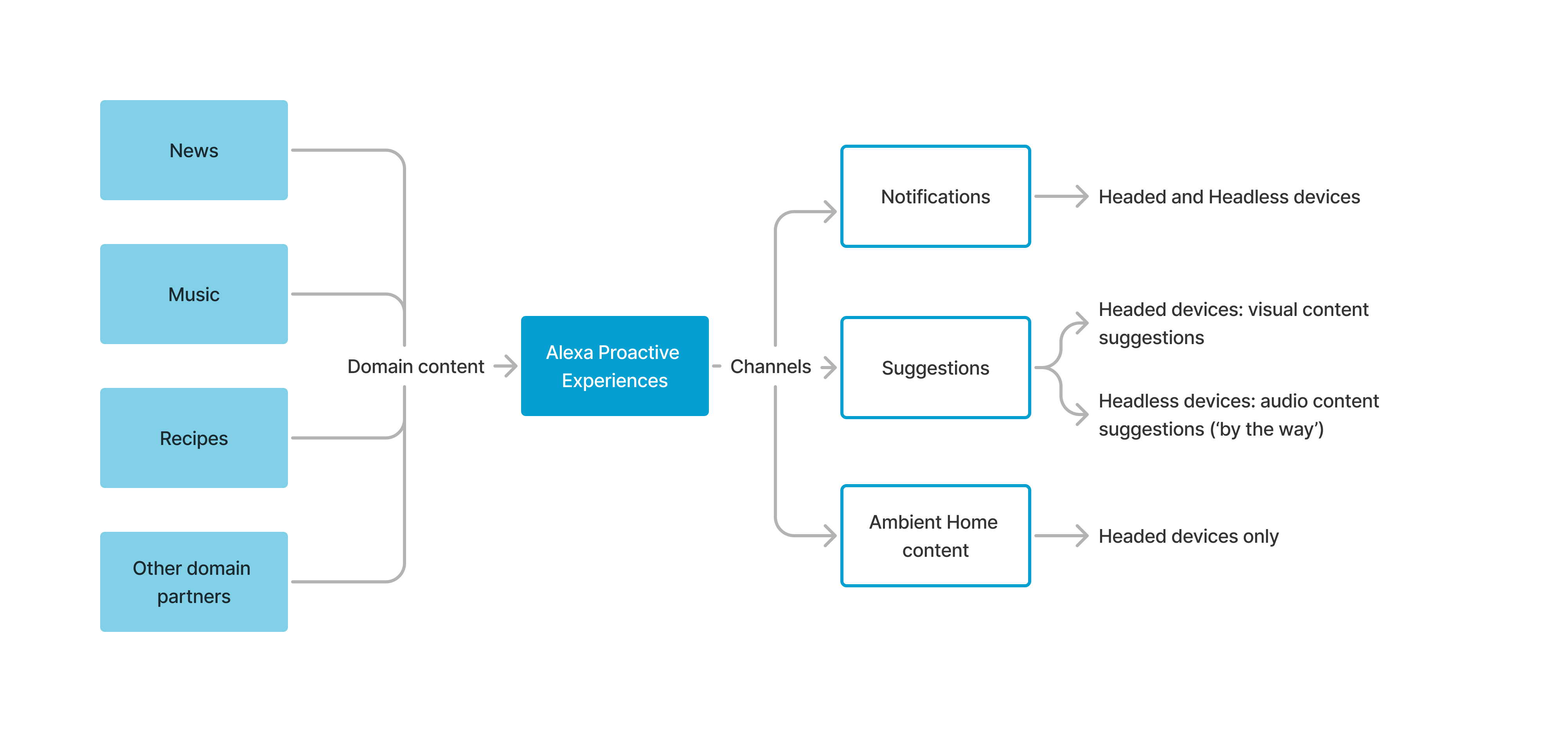

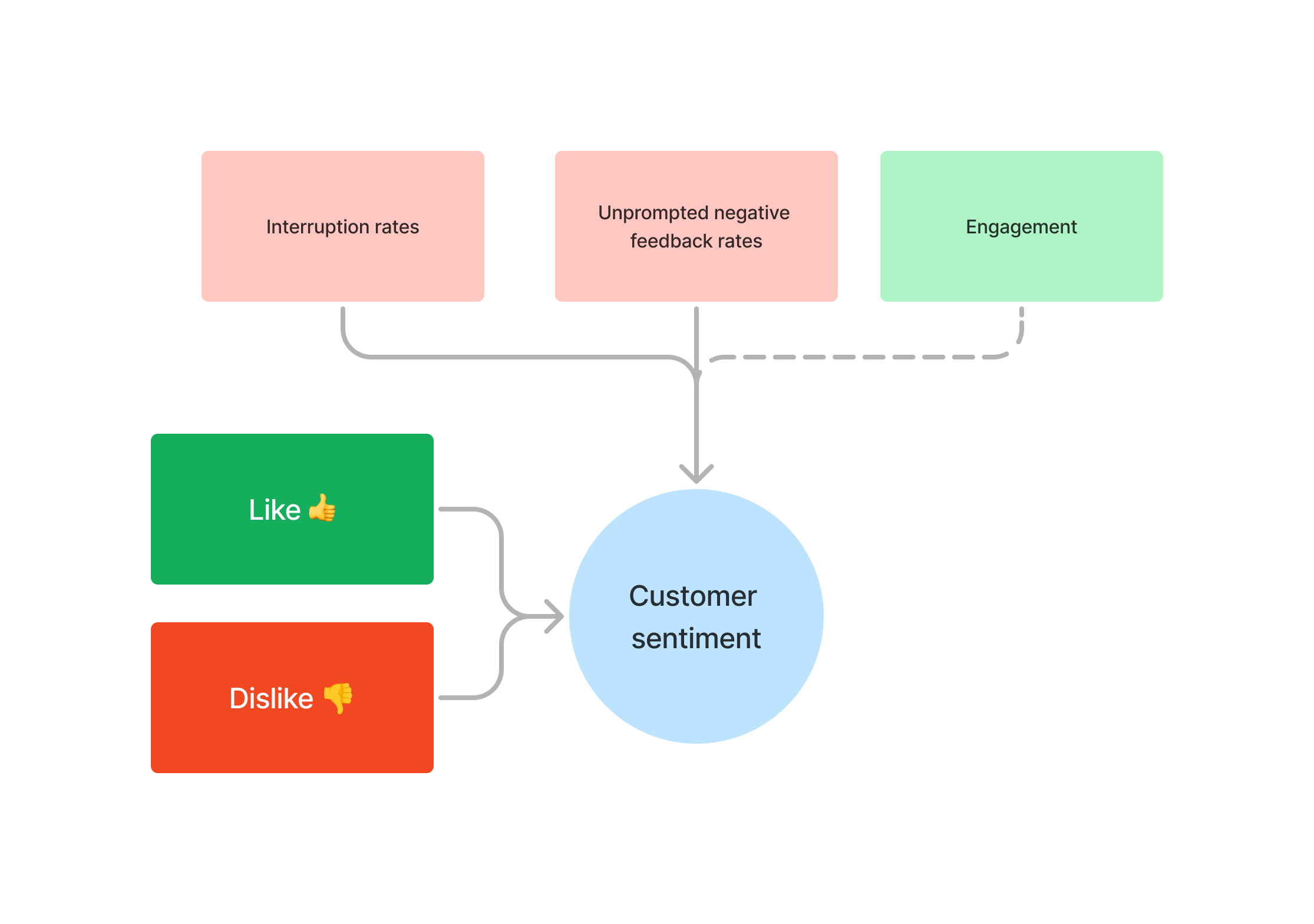

Currently, reportable customer sentiment captures only negative feedback. Positive sentiment is inferred from implicit signals such as engagement.

In 2022, the APEX team ran experiments collecting explicit customer feedback for select ambient home content and device notifications. Introducing touch-only Like and Dislike signals, via thumbs-up and thumbs-down actions, led to a significant increase in engagement, which suggests that customers are willing to provide explicit feedback.

Design Challenge

How might we expand in-the-moment customer feedback collection beyond negative sentiment to improve personalization, relevance, and timeliness?

⟡ Solution

Under the mentorship of the APEX team, I delivered a comprehensive Sentiment framework that:

- increased opportunities to solicit customer engagement

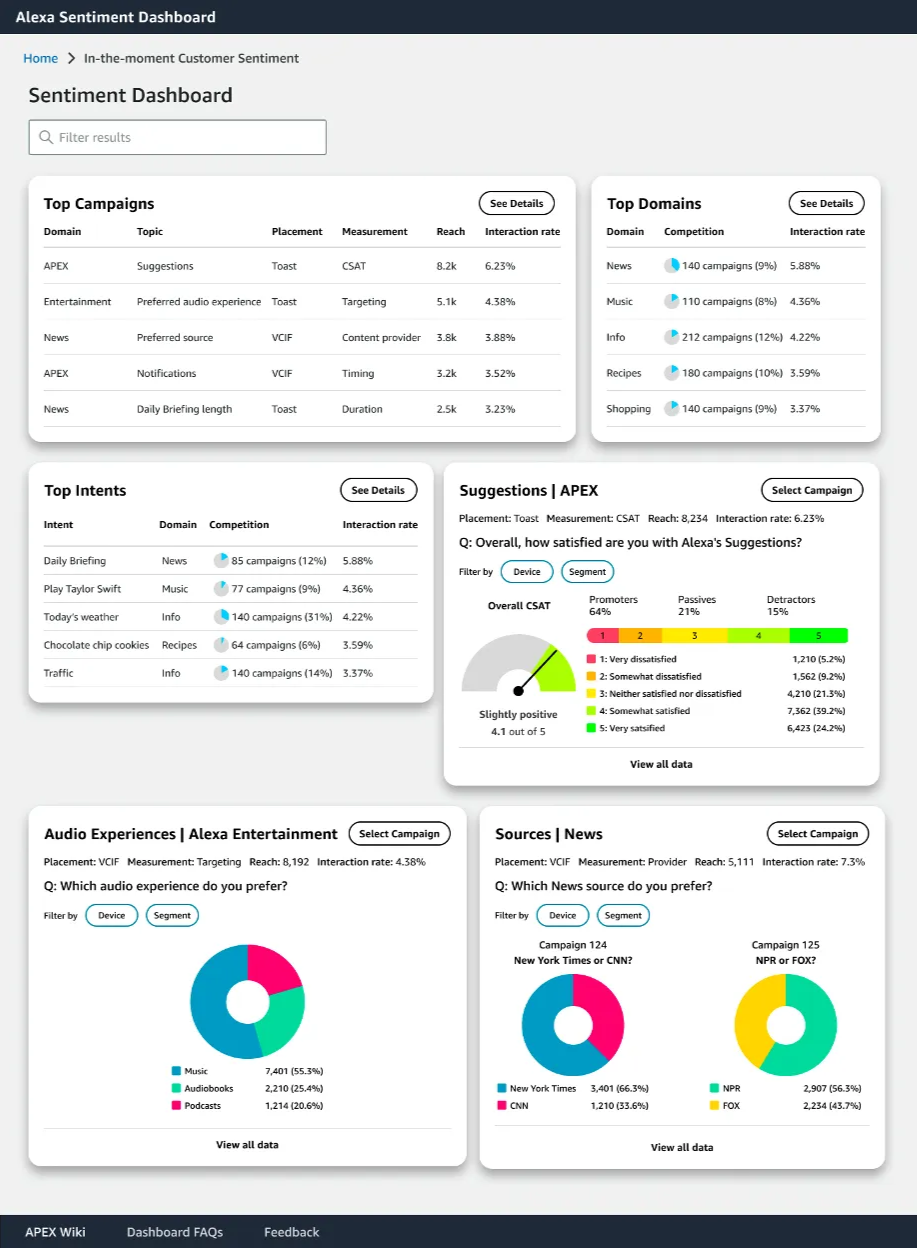

- produced scalable, quantitative metrics related to customer satisfaction

- provided actionable insights to improve personalization, relevance, and timeliness

All of these can be shared and referenced by domain partners to optimize content delivery and improve customer satisfaction.

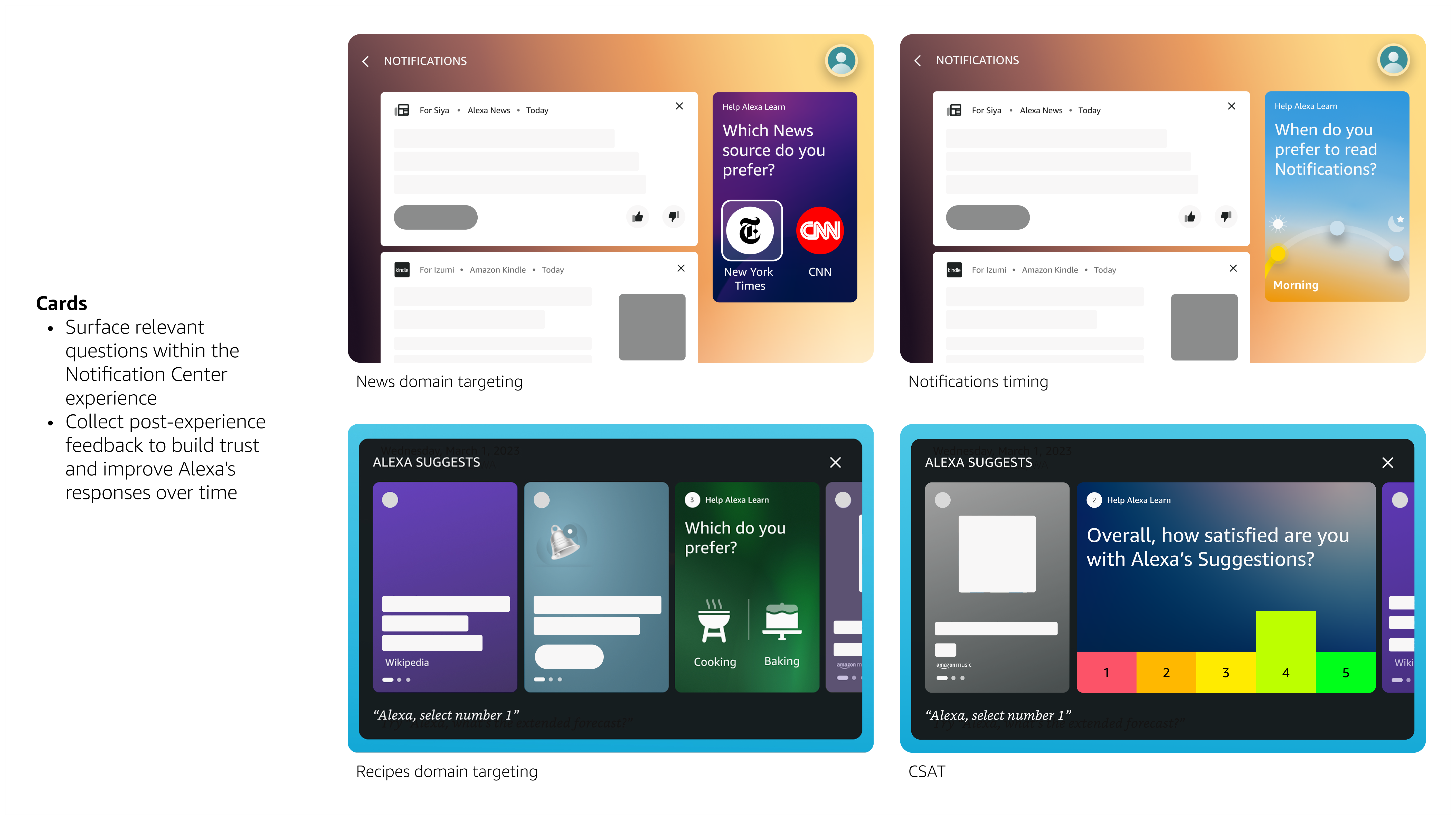

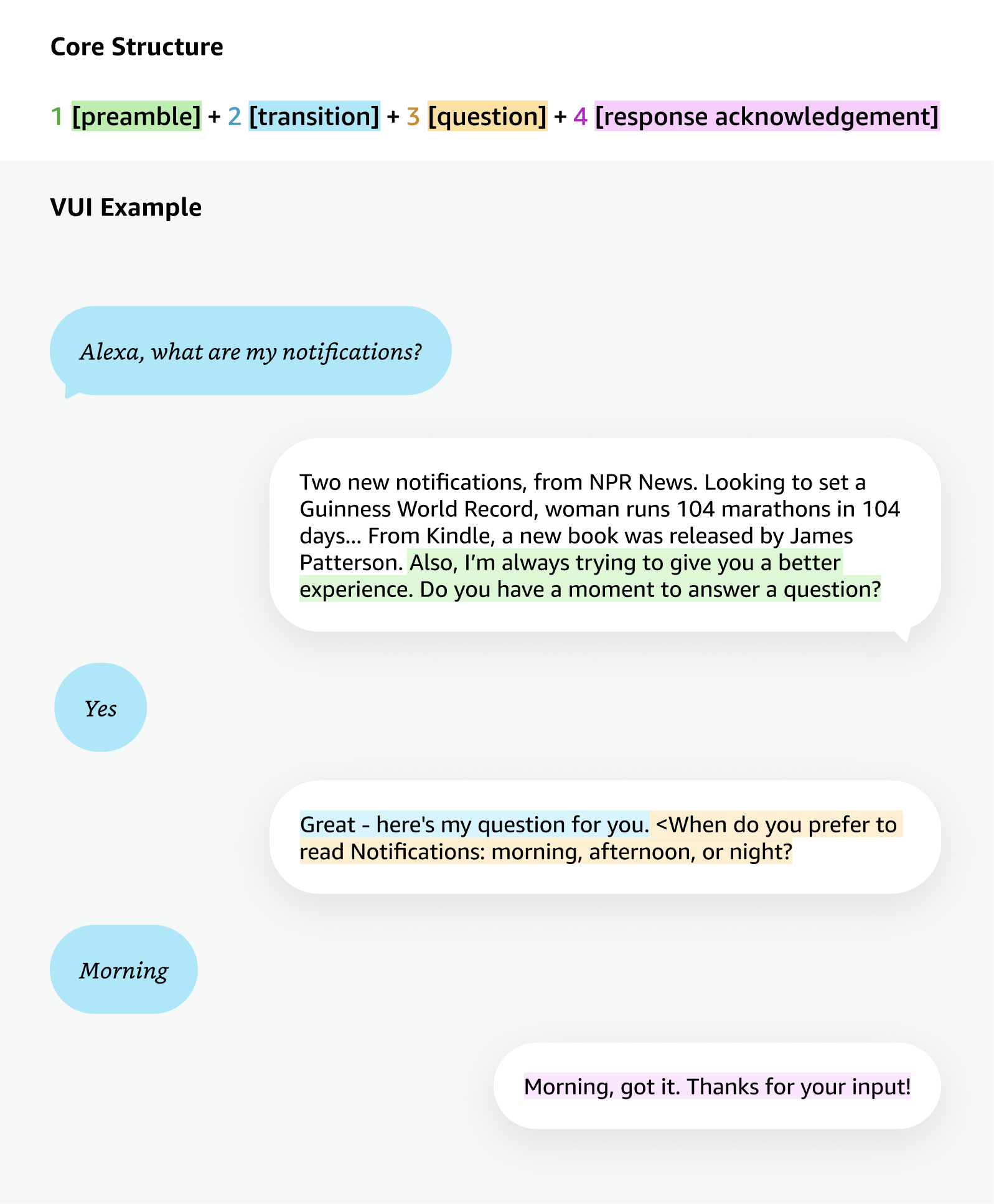

The Sentiment framework establishes a collection of scalable design patterns and questions that encourage active engagement, support both voice and touch interaction, and expand upon existing Alexa design capabilities.

Conversation Design

Sentiment Dashboard

⟡ Designing for Scale

Alexa aims to be voice-forward, yet multimodal. This was my first time designing for non-mobile experiences, and I learned a lot from my team about creating a scalable and captivating multimodal design vision.

It was easy to feel overwhelmed by how much there was to learn about multimodal design. To center and ground my internship, I created three tenets to guide my decision-making:

Prioritize experiences that minimize the time to submit feedback. Avoid double-barreled questions, multi-select answers, and wordy copy. Interactions should be single-tap, and answer options should be easy to understand, visible, and equally accessible.

Customers engage with response experiences for only a limited amount of time. The UI displayed should be captivating and entice the customer to interact.

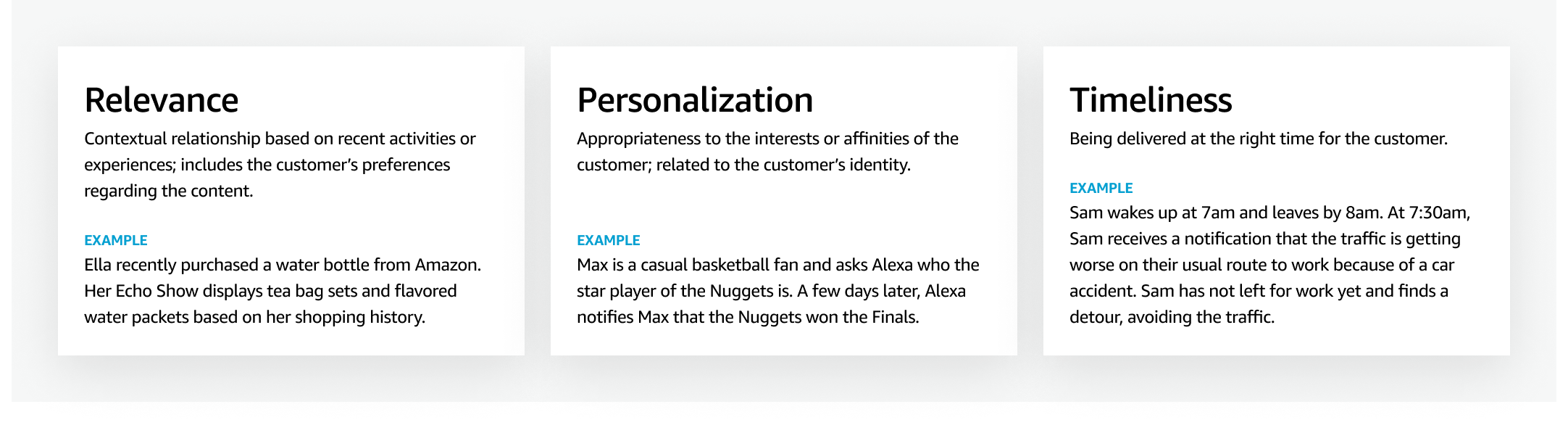

Customers are directly providing input to Alexa, which creates a sense of control. To maintain customer trust, information collected should be actionable and feed into efforts to improve personalization, relevance, and timeliness.

⟡ Actionable Insights

Before creating the design patterns, I worked with the APEX researcher to establish the main customer sentiment themes identified in past user research.

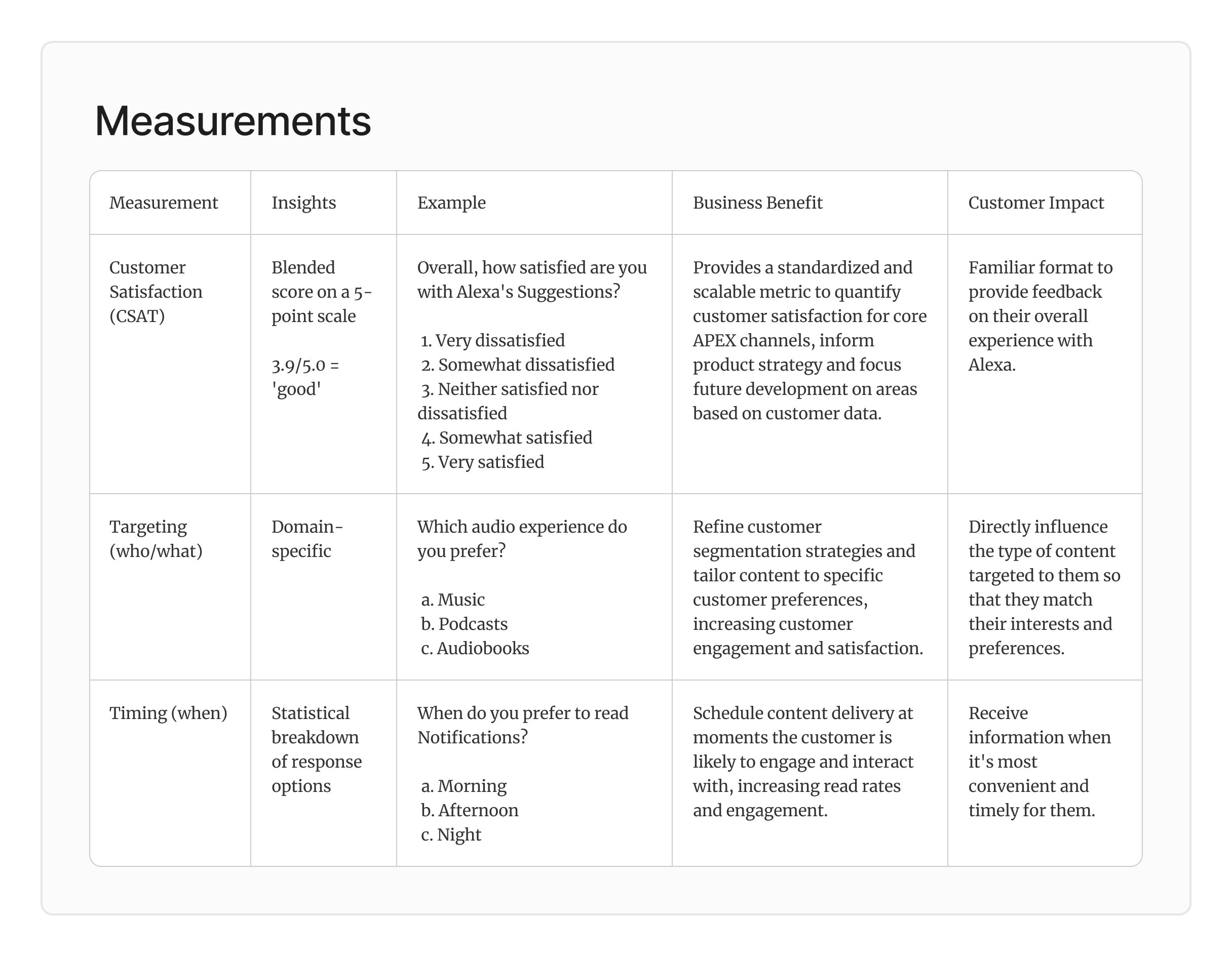

I decided to use CSAT, the most relevant industry-standard sentiment and satisfaction metric for this project, alongside descriptive statistics for questions that mapped to the themes of relevance, personalization, and timeliness.

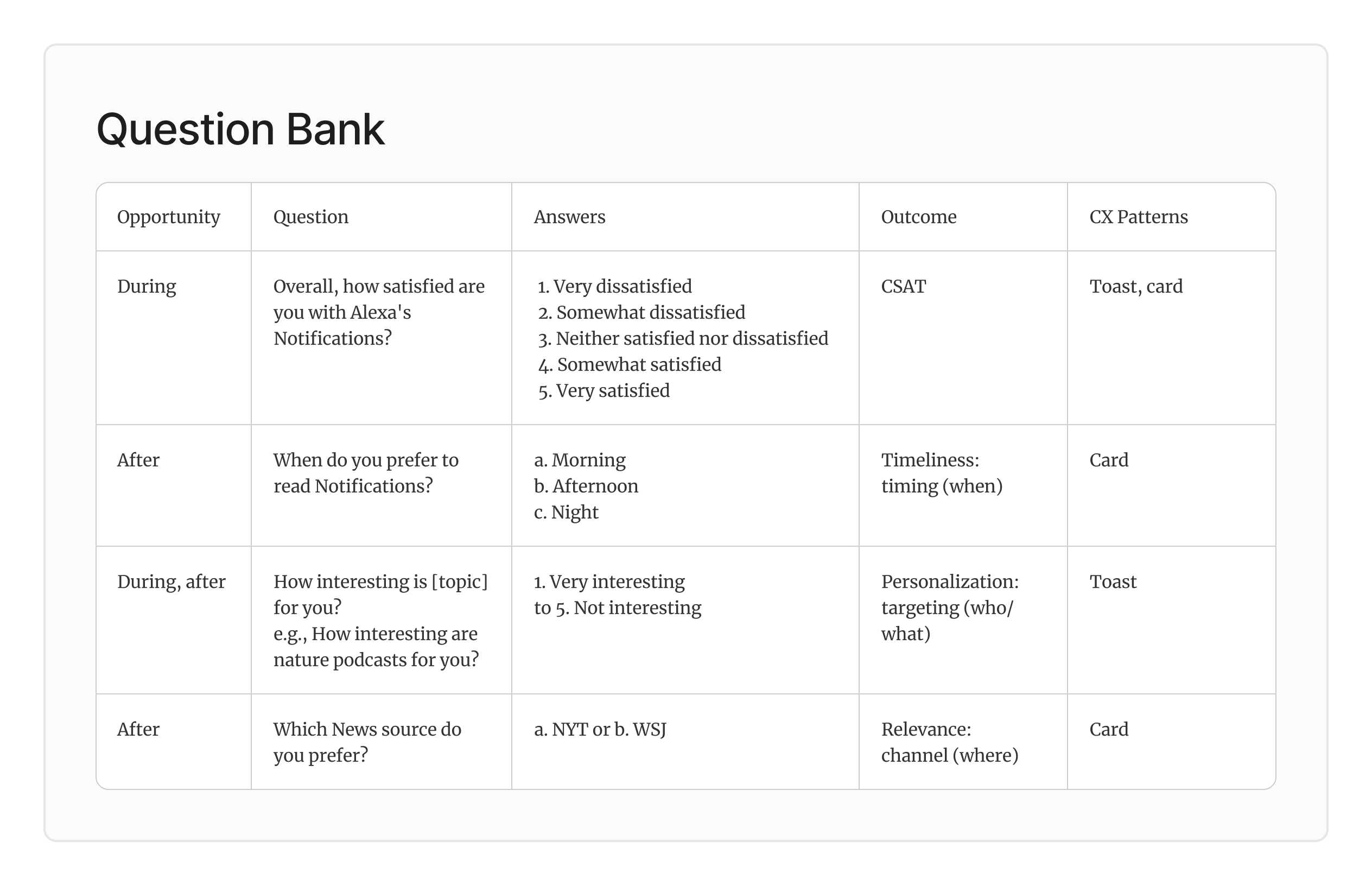
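For readers unfamiliar with CSAT, the sketch below shows one common way to compute it: the share of responses at or above a "satisfied" threshold, expressed as a percentage. The 1-to-5 rating scale, the threshold of 4, the function name `csat_score`, and all sample responses are illustrative assumptions, not data or definitions from the project.

```python
from collections import Counter
from statistics import mean


def csat_score(ratings, satisfied_threshold=4):
    """Return CSAT as the percentage of ratings at or above the threshold.

    Assumes a 1-5 scale where 4 and 5 count as "satisfied" (hypothetical).
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    satisfied = sum(1 for rating in ratings if rating >= satisfied_threshold)
    return 100 * satisfied / len(ratings)


# Hypothetical responses to a 1-5 satisfaction question.
ratings = [5, 4, 3, 5, 2, 4, 4, 1, 5, 4]
print(csat_score(ratings))  # 70.0
print(mean(ratings))        # 3.7

# Descriptive statistics for a hypothetical time-of-day question:
# count how often each answer was chosen.
time_of_day_answers = ["morning", "evening", "morning", "afternoon", "morning"]
print(Counter(time_of_day_answers).most_common(1))  # [('morning', 3)]
```

A single percentage like this is easy to compare across domain partners, while the answer counts give the kind of per-question detail that descriptive statistics provide for relevance, personalization, and timeliness.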

Once the measurements were defined, a question bank of about 10 questions per theme was created, with each question tied to an actionable measurement.

⟡ Future Opportunities

Scaling Across Domain Partners

Expanding this framework across APEX domain partners (News, Music, Recipes, etc.) would involve different levels of implementation complexity depending on the question type.

- Easier to implement: Standardized formats like rating scales, time-of-day questions, and CSAT scores require minimal customization.

- Requires partnership: Domain-specific multiple choice and either/or questions need collaboration with domain teams, Research, and VUI designers to ensure questions are unbiased and optimized for voice.

AI-powered Enhancements

Large language models could enable:

- Dynamic question generation tailored to each interaction

- Natural language feedback from customers

- Automated voice analysis for faster insights

- Real-time learning to reduce hallucinations and improve personalization

⟡ Results

⟡ Reflection

What I Learned

- Designing multimodal experiences — I had only designed for mobile and web prior to this internship, and learned how to design new voice UI and GUI interactions for a voice-first technology.

- Advocating for myself — I learned how to take initiative in meeting new people, being transparent on the scope of my project, and asking for specific feedback on my work. I also learned how to balance design needs with business needs, customer insights, and technical considerations.

- Stay committed, not attached — Having a flexible mindset and iterating on feedback were essential to my growth over the summer.